
- EU lawmakers introduce a landmark law to govern the use of AI in businesses and day-to-day life

- Some uses of AI, such as predicting who is more likely to commit a crime, have been banned, and others have been labeled “high-risk”

- Companies like OpenAI whose tools are available to the masses will face stricter regulation and will have to be more transparent

On Wednesday, EU lawmakers gave the final nod to a landmark law called the AI Act, which will govern AI-related concerns in the region. Businesses and tech firms slammed the Act when it was first proposed last year.

After a 37-hour discussion in December 2023, the law was not expected to come into force until early 2025, so it is good to see that it has been fast-tracked.

The bill has been passed by a sweeping majority.

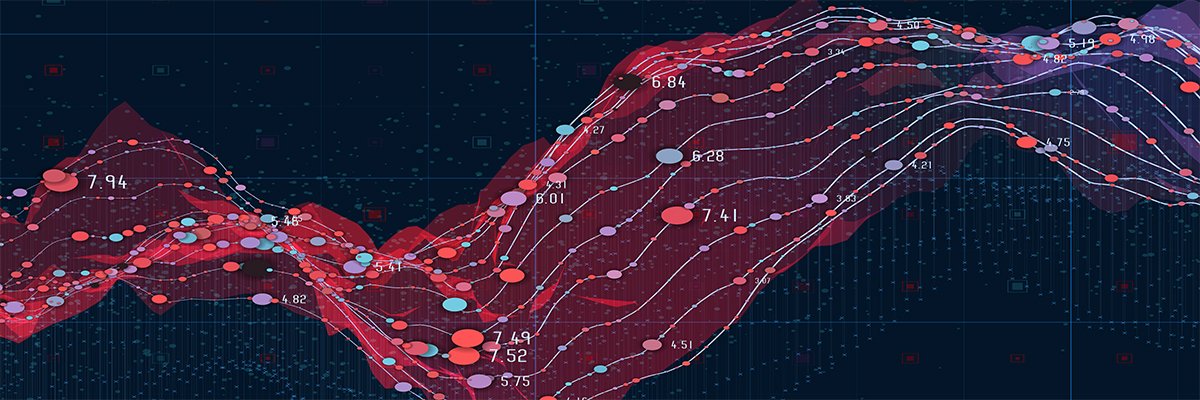

Since AI has been seeping into almost every industry, including healthcare and law enforcement, it was becoming increasingly important to have some sort of ground rules in place. This law serves that purpose.

It is a first-of-its-kind law that will change how people and businesses use AI day to day. It aims to make AI more human-centric and ensure that we can continue to reap its benefits while avoiding the dangers.

“The AI Act is not the end of the journey but the starting point for new governance built around technology.” - MEP Dragos Tudorache

What Does The New AI Law Entail?

The AI law will be responsible for deciding how AI will be used for different purposes. Some of the uses have been banned (especially ones that violate the fundamental rights of citizens) while others that are considered high-risk have received stricter guidelines.

For instance, lawmakers have banned the use of AI for:

- social scoring

- deciding how likely a person is to commit a crime

- monitoring one’s emotions and feelings, be it at school or at the workplace

- collecting biometric data to guess a person’s race, sexual orientation, or political preference

Next come the high-risk categories. These include the use of AI in education, recruitment, and government services. While AI can technically still be used in all of these areas, it will come with an extra set of rules and will require more transparency on the developer’s part.

Popular AI companies like OpenAI that create AI tools for the mass market will be subject to stricter regulation. They’ll be required to be more transparent with the government about what their tools can do.

Tools like these are often used to create manipulated pictures, so this law has made it mandatory for AI-generated images to be labeled to minimize the spread of misinformation.

Although the EU is the first to create AI-related laws, China has also started working on a draft of its own. In the US, President Joe Biden has recognized the dangers of unregulated AI use and has asked developers to share AI-related updates with the government.

