
Anthropic / Benj Edwards

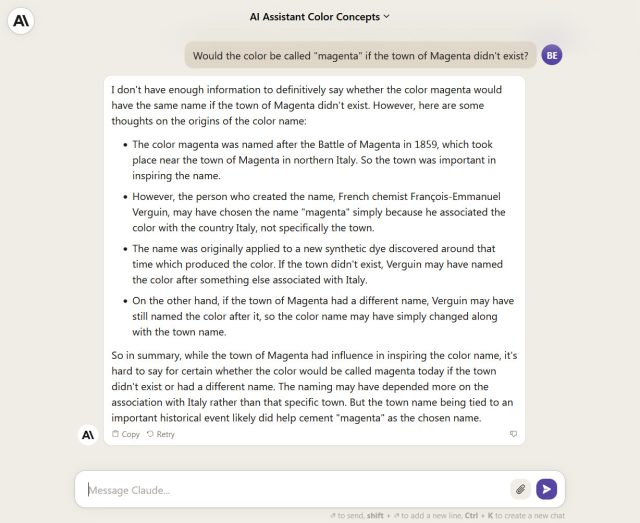

On Thursday, AI-maker and OpenAI competitor Anthropic launched Claude Pro, a subscription-based version of its Claude.ai web-based AI assistant, which functions similarly to ChatGPT. It’s available for $20/month in the US or £18/month in the UK, and it promises five-times-higher usage limits, priority access to Claude during high-traffic periods, and early access to new features as they emerge.

Like ChatGPT, Claude Pro can compose text, summarize, do analysis, solve logic puzzles, and more.

Claude.ai is Anthropic’s conversational interface for its Claude 2 AI language model, similar to how ChatGPT provides an application wrapper for the underlying models GPT-3.5 and GPT-4. In February, OpenAI took the subscription route with ChatGPT Plus, which for $20 a month likewise gives early access to new features and also unlocks GPT-4, OpenAI’s most powerful language model.

What does Claude have that ChatGPT doesn’t? One big difference is a 100,000-token context window, which means it can process about 75,000 words at once. Tokens are fragments of words used while processing text. That means Claude can analyze longer documents or hold longer conversations without losing its memory of the subject at hand. ChatGPT can only process about 8,000 tokens in GPT-4 mode.
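For a rough sense of scale, the token figures above can be converted to approximate word counts. The sketch below assumes a flat ratio of 0.75 English words per token, which is simply the ratio implied by the 100,000-token, 75,000-word figure; real tokenizers vary by text and language, and the function names are illustrative only.

```python
# Assumption: roughly 0.75 English words per token, the ratio implied by
# the "100,000 tokens is about 75,000 words" figure. Actual tokenizers differ.
WORDS_PER_TOKEN = 0.75


def tokens_to_words(tokens: int) -> int:
    """Estimate how many English words fit in a given number of tokens."""
    return round(tokens * WORDS_PER_TOKEN)


def words_to_tokens(words: int) -> int:
    """Estimate how many tokens a given number of English words uses."""
    return round(words / WORDS_PER_TOKEN)


# Context window sizes as reported in the article.
CONTEXT_WINDOWS = {"Claude 2": 100_000, "ChatGPT (GPT-4 mode)": 8_000}

for name, tokens in CONTEXT_WINDOWS.items():
    print(f"{name}: {tokens:,} tokens is roughly {tokens_to_words(tokens):,} words")
```

By this estimate, the 8,000-token GPT-4 window holds on the order of 6,000 words, compared with about 75,000 for Claude 2.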

“The big thing for me is the 100,000-token limit,” AI researcher Simon Willison told Ars Technica. “That’s huge! Opens up entirely new possibilities, and I use it several times a week just for that feature.” Willison regularly writes about using Claude on his blog, and he often uses it to clean up transcripts of his in-person talks. He also cautions, however, about “hallucinations,” where Claude sometimes makes things up.

“I’ve definitely seen more hallucinations from Claude as well” compared to GPT-4, says Willison, “which makes me nervous using it for longer tasks because there are so many more opportunities for it to slip in something hallucinated without me noticing.”

Ars Technica

Willison has also run into problems with Claude’s morality filter, which has caused him trouble by accident: “I tried to use it against a transcription of a podcast episode, and it processed most of the text before—right in front of my eyes—it deleted everything it had done! I eventually figured out that we had started talking about bomb threats against data centers towards the end of the episode, and Claude effectively got triggered by that and deleted the entire transcript.”

What does “5x more usage” mean?

Anthropic’s primary selling point for the Claude Pro subscription is “5x more usage,” but the company doesn’t clearly communicate what Claude’s free-tier usage limits actually are. Dropping clues like cryptic breadcrumbs, the company has written a support document about the topic that says, “If your conversations are relatively short (approximately 200 English sentences, assuming your sentences are around 15–20 words), you can expect to send at least 100 messages every 8 hours, often more depending on Claude’s current capacity. Over two-thirds of all conversations on claude.ai (as of September 2023) have been within this length.”

In another somewhat cryptic statement, Anthropic writes, “If you upload a copy of The Great Gatsby, you may only be able to send 20 messages in that conversation within 8 hours.” We’re not attempting the math, but if you know the precise word count of F. Scott Fitzgerald’s classic, it may be possible to glean Claude’s actual limits. We reached out to Anthropic for clarification yesterday and did not receive a response before publication.
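As an unofficial back-of-the-envelope sketch, the two examples in Anthropic’s support document can be turned into rough token estimates. Two assumptions here are not from Anthropic: the 0.75 words-per-token ratio (implied by the 100,000-token, 75,000-word figure cited earlier) and a word count of about 47,000 for The Great Gatsby, an approximate and commonly cited figure. Neither reveals the actual limits; the sketch only shows how different the two conversation sizes are.

```python
# Assumption: about 0.75 English words per token (not an official figure).
WORDS_PER_TOKEN = 0.75

# Assumption: approximate, commonly cited length of The Great Gatsby.
# Swap in a precise count if you have one.
GATSBY_WORDS = 47_000


def estimate_tokens(words: float) -> int:
    """Rough token estimate for a given number of English words."""
    return round(words / WORDS_PER_TOKEN)


# "Relatively short" conversation per the support document:
# about 200 sentences of 15 to 20 words each.
short_low = estimate_tokens(200 * 15)
short_high = estimate_tokens(200 * 20)
gatsby_tokens = estimate_tokens(GATSBY_WORDS)

print(f"Short conversation: about {short_low:,} to {short_high:,} tokens")
print(f"The Great Gatsby:   about {gatsby_tokens:,} tokens")
print(f"Size ratio:         {gatsby_tokens / short_high:.0f}x to "
      f"{gatsby_tokens / short_low:.0f}x larger")
```

Under these assumptions, the novel is more than ten times the size of a “relatively short” conversation, while the quoted message allowance drops only from at least 100 to about 20. That is consistent with Anthropic’s point that long attachments cost more to process, but it does not pin down an exact quota.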

Either way, Anthropic makes it clear that for an AI model with a 100,000-token context limit, usage limits are necessary because the computation involved is expensive. “A model as capable as Claude 2 takes a lot of powerful computers to run, especially when responding to large attachments and long conversations,” Anthropic writes in the support document. “We set these limits to ensure Claude can be made available to many people to try for free, while allowing power users to integrate Claude into their daily workflows.”

Also, on Friday, Anthropic launched Claude Instant 1.2, which is a less expensive and faster version of Claude that is available through an API. However, it’s less capable than Claude 2 in logical tasks.

While it’s clear that large language models like Claude can do interesting things, it seems their drawbacks and tendency toward confabulation may hold them back from wider use due to reliability concerns. Still, Willison is a fan of Claude 2 in its online form: “I’m excited to see it continue to improve. The 100,000-token thing is a massive win, plus the fact you can upload PDFs to it is really convenient.”
